2022-3-13: InstructGPT, Model soups, muParameterization, LiteTransformerSearch

Training language models to follow instructions with human feedback

The InstructGPT paper. “Starting with a set of labeler-written prompts and prompts submitted through the OpenAI API, we collect a dataset of labeler demonstrations of the desired model behavior, which we use to fine-tune GPT-3 using supervised learning. We then collect a dataset of rankings of model outputs, which we use to further fine-tune this supervised model using reinforcement learning” Also “outputs from the 1.3B parameter InstructGPT model are preferred to outputs from the 175B GPT-3, despite having 100x fewer parameters. Moreover, InstructGPT models show improvements in truthfulness and reductions in toxic output generation while having minimal performance regressions on public NLP datasets.” So basically, reducing alignment problem to supervised learning of human preferences works pretty well, as measured by being as “aligned” as a 100x larger model without such training.

Region-of-Interest Based Neural Video Compression

Exploiting observation that distortion matters to different extents in different parts of an image/video to get better rate-distortion tradeoff (at least with a distortion metric that cares more about certain parts of the image). They need an ROI mask to tell the model what to allocate more bits to, so basically you can only compress semantic segmentation datasets. Although random smooth “synthetic ROI masks” work too for training (though they didn’t try it for test). Better pareto frontier for neural video compression, but under very strong assumptions.

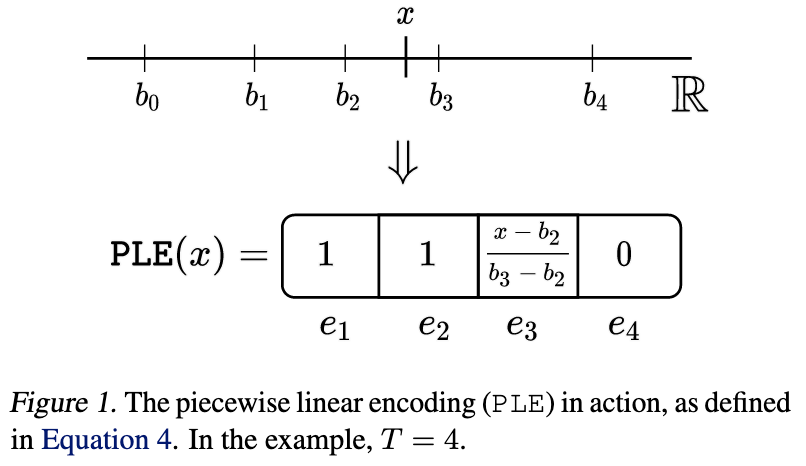

On Embeddings for Numerical Features in Tabular Deep Learning

They get MLPs and transformers to work about as well as gradient boosted decision trees for various problems by designing better encodings of numeric features. Two encodings work well:

indicator vectors for whether the number exceeds each of a sequence of thresholds, with a value between 0-1 for the interval it lies within (in proportion to where in the interval it is).

sinusoidal features, with trainable frequency coeffs.

Increases my conviction that one could design a better tokenizer for NLP, esp. for handling numeric inputs.

An Empirical Study of Low Precision Quantization for TinyML

Qualcomm people benchmarked a bunch of post-training quantization algorithms in a unified benchmark + codebase. No clear winner, but they can often quantize losslessly down to 8 bits or less, depending on the model and task. Not super surprising, but always good to see an apples-to-apples comparison across methods.

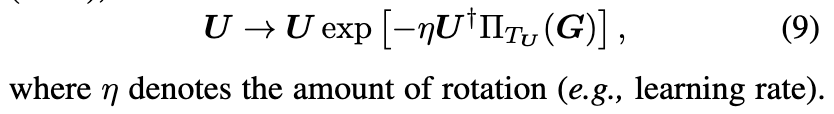

projUNN: efficient method for training deep networks with unitary matrices

Two variants: projUNN-D just does a normal weight update, and then projects to nearest unitary mat (in Frobenius norm) using first equation below. Needs a pseudoinverse and matrix square root to do so, but it is a closed-form update. ProjUNN-T instead projects the gradient into the tangent space of the current unitary weight matrix (2nd equation), and then rotates the weight matrix U in this direction (3rd equation). They constrain the matrix G to be low-rank, which speeds this all up a lot (although it’s still much slower than just not making the weights unitary). They also try out randomized linear algebra (e.g., column sampling) to speed it up more. Overall, I’m not convinced this is worth the time cost, but I wish it was because unitary matrices make your (square) linear layers invertible, basically solve numerical stability, and are just generally super nice.

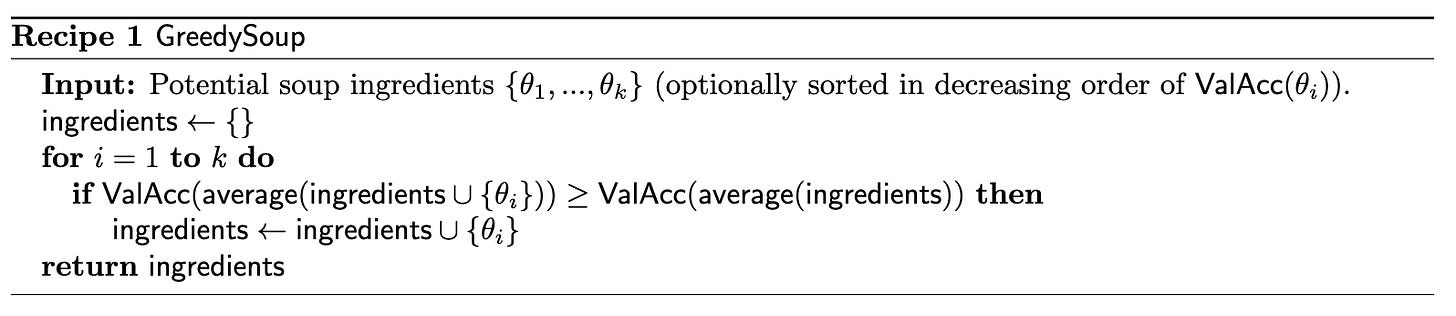

⭐ Model soups: averaging weights of multiple fine-tuned models improves accuracy without increasing inference time

Instead of just training one fine-tuned model or selecting only one fine-tuned model after an hparam search, average together the weights from a bunch of fine-tuned models (all starting from the same initialization). Consistently helps accuracy at iso inference cost, since only parameter values change.

Two approaches to combining the models: 1) “GreedySoup”, which just iteratively tries averaging in the weights from each model and keeping any updates that help validation accuracy. 2) “Learned Soup” procedure is to directly learn a convex combo of the all the fine-tuned models’ weights by gradient descent. Computationally simple but means you have to materialize all the models in RAM at once. They also tried a variant of Learned Soup where the coeffs are learned for each layer instead of for the whole network, which worked significantly better in-distro but was worse OOD.

Interesting implications: 1) model soups offer a means of turning more training compute into more accuracy for fine-tuning. Even if the world moves to only fine-tuning foundation models, fine-tuning a large model a dozen times could still be a lot of training spend. 2) This is a different workflow than just fine-tuning a model once, or just keeping the best fine-tuned model so far, suggesting possible demand for more complex training pipelines.

HyperMixer: An MLP-based Green AI Alternative to Transformers

They use a hypernetwork that takes in each input token’s embedding plus an original-Transformer-style position embedding, and outputs an DxN weight matrix to mix all the tokens. And it only kinda seems to work better than vanilla transformer, maybe, and that’s even with their method using GELU in the mixing. It does get a lot faster for long sequence lengths because of the linear complexity, but they don’t compare to other linear attention alternatives. Overall I wasn’t sure what to take away from this paper.

ActiveMLP: An MLP-like Architecture with Active Token Mixer

On the one hand, they get high ImageNet accuracies given their FLOP and param counts (e.g., 82% Top1 at 27M params, which 6% more accuracy for ~2 million more params than a ResNet-50). On the other hand, the way their module works seems like FLOPs hacking. They have a submodule that spits out horizontal and vertical offsets (tied across groups of layers) to gather from for each token, so they have a gather with dynamic indices for each input position. They combine (by concatenating?) the original vector, the vertical-gathered vector, and the horizontal-gathered vector. I’m not sure how they got the index generation to be differentiable. So...this might actually be a good idea, but it’s hard to say given the lack of speed numbers + the large number of possible confounders when introducing an entirely new architecture.

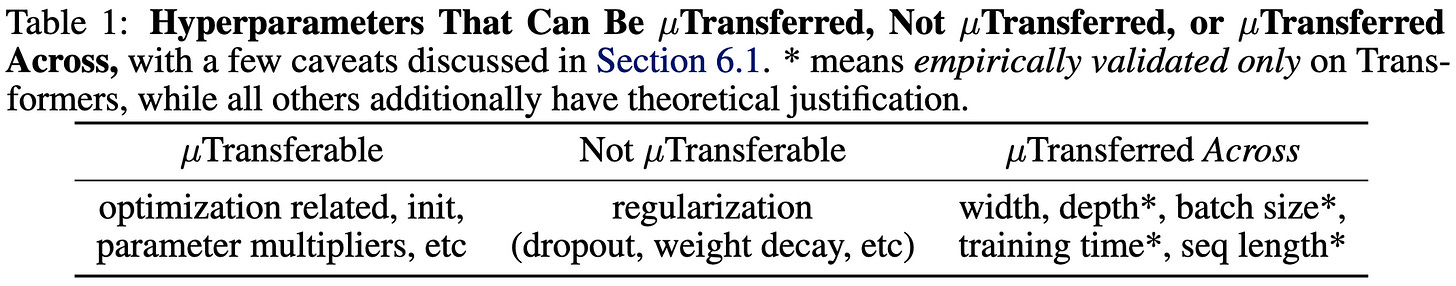

⭐ Tensor Programs V: Tuning Large Neural Networks via Zero-Shot Hyperparameter Transfer

They figured out how to reliably pick hparams for large models using only training runs on small models. “For example, 1) by transferring pretraining HPs from a model of 13M parameters, we outperform published numbers of BERT-large (350M parameters), with a total tuning cost equivalent to pretraining BERT-large once; 2) by transferring from 40M parameters, we outperform published numbers of the 6.7B GPT-3 model, with tuning cost only 7% of total pretraining cost” pip install mup to use it. Reason to think they nailed the way to reason about hparam transfer: "we argue in Appendix J.3 that μP should also be the unique parametrization that allows HP transfer across width.” and "we observe, for any fixed HP combination, training performance never decreases with width in μP, in contrast to [standard parameterization]”.

⭐ LiteTransformerSearch: Training-free On-device Search for Efficient Autoregressive Language Models

Okay, this is really cool. "We rigorously show that the latency and perplexity pareto-frontier can be found without need for any model training, using non-embedding parameters as a proxy for perplexity” and “We evaluate our method, dubbed Lightweight Transformer Search (LTS), on diverse devices from ARM CPUs to Nvidia GPUs and show that the perplexity of Transformer-XL can be achieved with up to 2× lower latency.” They basically just have some search algorithm that generates candidate architectures, and they combine real latency measurements on target hardware with parameter count as a proxy for accuracy. Using decoder-only Transformer-XL as backbone for the decoder-only architectures.